PORTFOLIO

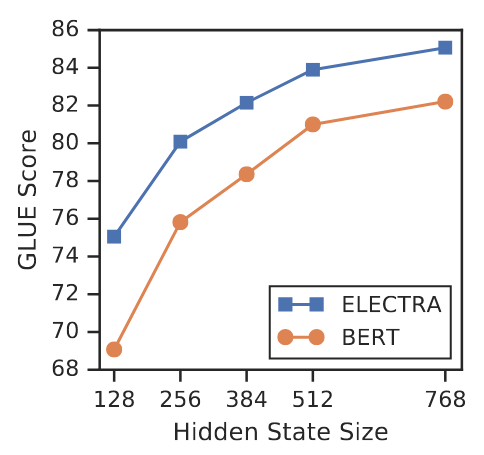

An ELECTRA model for binary classification that determines whether a tweet is about a disaster. This implementation is part of an exercise to find the best-performing model among an RNN, an MLP, and a transformer-based model.

- Back-End

- Machine Learning

- Deep Learning

A repository containing my solutions to all CS224N assignments from the 2021 class.

- GloVe word vectors with Gensim.

- Word2vec skip-gram implementation with NumPy.

- Training a dependency parser with the Adam optimizer and dropout (PyTorch).

- A Bi-LSTM + attention machine translator implemented in PyTorch.

- (Pre)training a Transformer model to predict a person's birthplace.

- Back-End

- Machine Learning

- Deep Learning

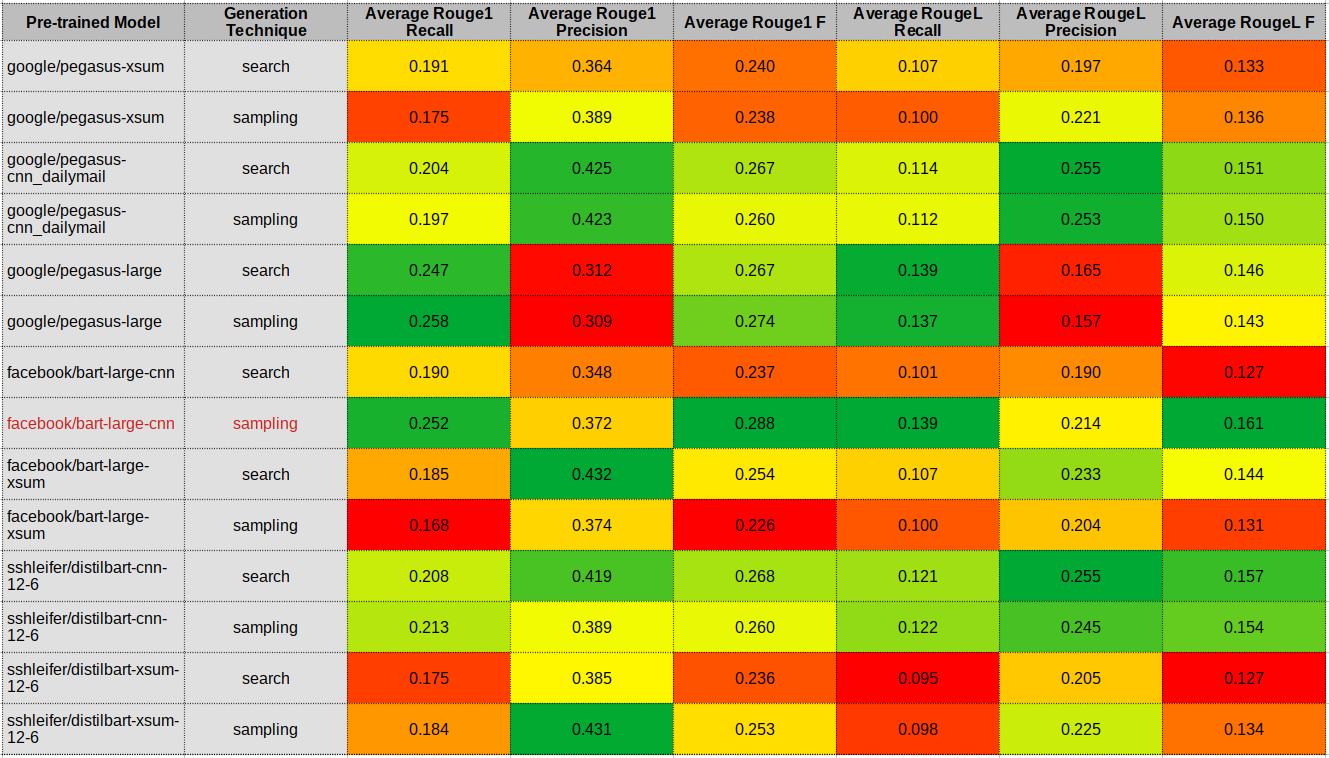

An experimentation project to help choose a state-of-the-art (SOTA) neural model for summarization. The project uses Hugging Face's pre-trained models on the XSum and CNN/Daily Mail datasets. The generated summaries are evaluated intrinsically and extrinsically with ROUGE-1 and ROUGE-L.

- Back-End

- Machine Learning

- Deep Learning

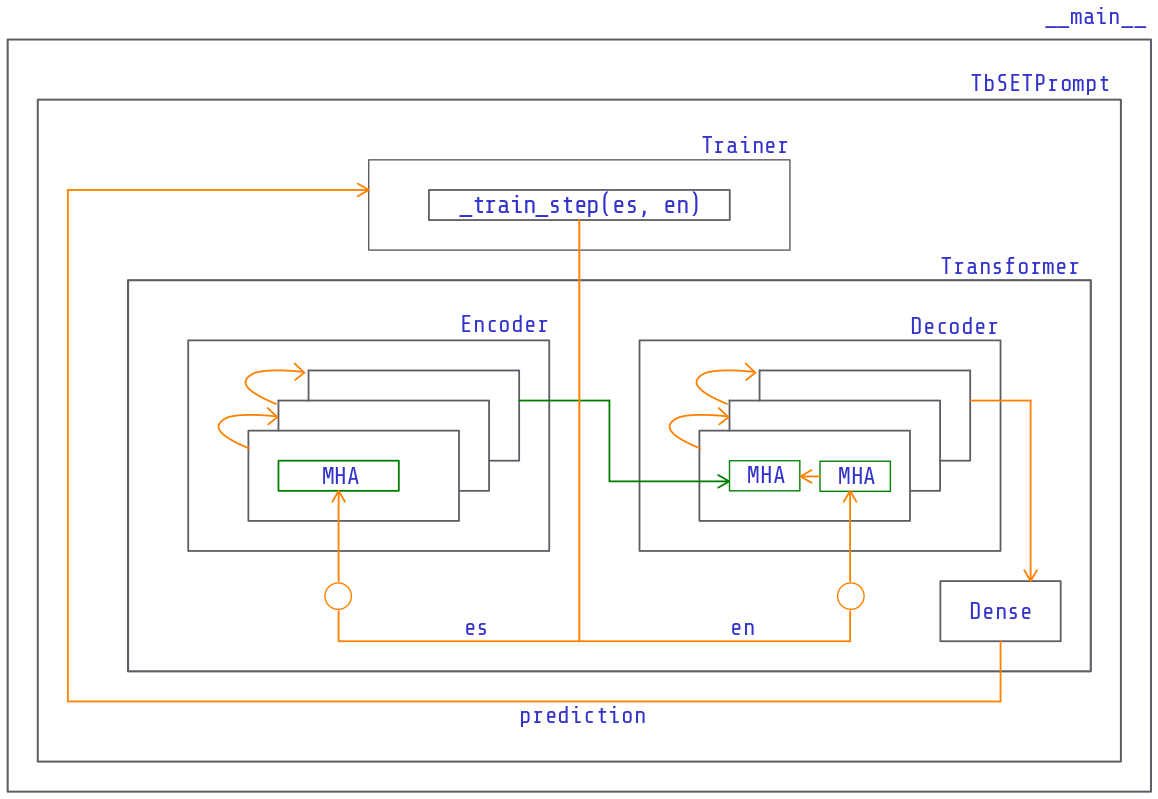

A Spanish-English translator based on a Transformer model. It was trained on Google Cloud on 114k examples using a custom training loop. Rather than a notebook, this is a modular, object-oriented project with a simple TUI (text-based user interface) for interacting with the translator.

- Back-End

- Machine Learning

- Deep Learning

A prototype for measuring household water consumption using IoT, time-series databases, and visualization software. The project aims to understand how we use water so that we can reduce our water footprint and help fight climate change.

- IoT

- Back-End

A website I made with several objectives in mind:

- Update my coding skills

- Share what I learned

- Serve as a tool for the Mozilla Club Peru, which I founded to help increase web literacy in my community

- Front-End

- Back-End

- DevOps